By SuKai, January 23, 2022

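Earlier articles covered building a Kubernetes application platform with client-go and go-restful. This time we look at developing a custom resource controller inside that platform.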

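Scenario:

In a multi-tenant machine learning platform, build a Kubernetes controller that reconciles the CRD (custom resource) TrackingServer. The controller manages the corresponding PersistentVolumeClaim, TLS Secret, Service, Ingress, and Deployment resources in Kubernetes.

Features:

1. If the CR instance specifies VolumeSize and StorageClassName, create a matching PersistentVolumeClaim to hold the data directory of MLflow's local SQLite database. If they are not specified, do not create the PersistentVolumeClaim, or delete it if it already exists.

2. If the CR instance specifies Cert and Key data, create a matching Secret of type TLS to serve as the Ingress TLS certificate. If they are not specified, do not create the Secret, or delete it if it exists.

3. Look up the Secret with the same namespace and name. If it exists, configure the Ingress with that TLS certificate.

4. Create and update the Service and Deployment according to the CR instance's parameters.

5. When the CR instance is deleted, clean up the corresponding Kubernetes resources. Before deleting a resource, check that it really is a dependent of the CR instance.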

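Implementation:

The development work breaks down into three major steps:

1. Generate the code and deployment manifests with Kubebuilder

2. Register the controller in the Controller Manager

3. Implement the business logic in the controller's reconcile code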

Creating the API with Kubebuilder

Specify the GVK (group, version, kind). TrackingServer is the MLflow resource we need here. The other two kinds belong to the same platform.

```shell
kubebuilder create api --group experiment --version v1alpha2 --kind TrackingServer
kubebuilder create api --group experiment --version v1alpha2 --kind JupyterNotebook
kubebuilder create api --group experiment --version v1alpha2 --kind CodeServer
```

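Defining the TrackingServer custom resource

VolumeSize, StorageClassName, Cert, and Key are tagged omitempty, which marks them as optional fields.

The +genclient marker tells the Kubernetes code generator to produce clientset, informer, and lister code.

The printcolumn markers define the extra columns shown by kubectl get.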

```go
// TrackingServerSpec defines the desired state of TrackingServer
type TrackingServerSpec struct {
	// INSERT ADDITIONAL SPEC FIELDS - desired state of cluster
	// Important: Run "make" to regenerate code after modifying this file

	Size             int32  `json:"size"`
	Image            string `json:"image"`
	S3_ENDPOINT_URL  string `json:"s3_endpoint_url"`
	AWS_ACCESS_KEY   string `json:"aws_access_key"`
	AWS_SECRET_KEY   string `json:"aws_secret_key"`
	ARTIFACT_ROOT    string `json:"artifact_root"`
	BACKEND_URI      string `json:"backend_uri"`
	URL              string `json:"url"`
	VolumeSize       string `json:"volumeSize,omitempty"`
	StorageClassName string `json:"storageClassName,omitempty"`
	Cert             string `json:"cert,omitempty"`
	Key              string `json:"key,omitempty"`
}

// TrackingServerStatus defines the observed state of TrackingServer
type TrackingServerStatus struct {
	// INSERT ADDITIONAL STATUS FIELD - define observed state of cluster
	// Important: Run "make" to regenerate code after modifying this file
}

// +genclient
// +kubebuilder:object:root=true
// +kubebuilder:subresource:status
// +kubebuilder:printcolumn:name="S3_ENDPOINT_URL",type="string",JSONPath=".spec.s3_endpoint_url"
// +kubebuilder:printcolumn:name="ARTIFACT_ROOT",type="string",JSONPath=".spec.artifact_root"
// TrackingServer is the Schema for the trackingservers API
type TrackingServer struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   TrackingServerSpec   `json:"spec,omitempty"`
	Status TrackingServerStatus `json:"status,omitempty"`
}
```
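omitempty has two effects. At the JSON level, an empty value is dropped when the object is serialized. At the schema level, controller-gen treats fields without omitempty (or an +optional marker) as required in the generated CRD schema. The sketch below covers only the serialization side. It uses encoding/json on a hypothetical miniSpec that copies a few of the tags above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// miniSpec copies a few fields and tags from TrackingServerSpec.
type miniSpec struct {
	Size       int32  `json:"size"`
	URL        string `json:"url"`
	VolumeSize string `json:"volumeSize,omitempty"`
	Cert       string `json:"cert,omitempty"`
}

// encode marshals the spec; empty omitempty fields are left out of the output,
// while fields without omitempty are always written, even when empty.
func encode(s miniSpec) string {
	out, err := json.Marshal(s)
	if err != nil {
		panic(err) // cannot happen for this plain struct
	}
	return string(out)
}

func main() {
	fmt.Println(encode(miniSpec{Size: 1, URL: "https://mlflow.platform.aiscope.io/platform"}))
	// {"size":1,"url":"https://mlflow.platform.aiscope.io/platform"}
	fmt.Println(encode(miniSpec{}))
	// {"size":0,"url":""}
}
```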

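Generating code and manifests with Kubebuilder

make generate regenerates the DeepCopy code, and make manifests generates the CRD (and RBAC) YAML files. The sample YAML under config/samples is scaffolded by kubebuilder create api.

```shell
make generate
make manifests
```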

Registering the controller

IngressController selects whether the Nginx or the Traefik ingress controller is used. Recorder emits Kubernetes events. TraefikClient is the Traefik clientset interface.

```go
trackingserverReconciler := &trackingserver.TrackingServerReconciler{
	IngressController: s.IngressController,
	TraefikClient:     kubernetesClient.Traefik(),
}
if err = trackingserverReconciler.SetupWithManager(mgr); err != nil {
	klog.Fatalf("Unable to create trackingserver controller: %v", err)
}
```

```go
type TrackingServerReconciler struct {
	client.Client
	TraefikClient           traefikclient.Interface
	Logger                  logr.Logger
	Recorder                record.EventRecorder
	IngressController       string
	MaxConcurrentReconciles int
}
```

For specifies which CRD this controller reconciles. Every event on instances of that CR is routed to this controller.

```go
// SetupWithManager sets up the controller with the Manager.
func (r *TrackingServerReconciler) SetupWithManager(mgr ctrl.Manager) error {
	if r.Client == nil {
		r.Client = mgr.GetClient()
	}
	r.Logger = ctrl.Log.WithName("controllers").WithName(controllerName)
	if r.Recorder == nil {
		r.Recorder = mgr.GetEventRecorderFor(controllerName)
	}
	if r.MaxConcurrentReconciles <= 0 {
		r.MaxConcurrentReconciles = 1
	}
	return ctrl.NewControllerManagedBy(mgr).
		Named(controllerName).
		WithOptions(controller.Options{
			MaxConcurrentReconciles: r.MaxConcurrentReconciles,
		}).
		For(&experimentv1alpha2.TrackingServer{}).
		Complete(r)
}
```

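The main Reconcile entry point

First, r.Get(rootCtx, req.NamespacedName, trackingServer) looks up the TrackingServer instance by name.

trackingServer.ObjectMeta.DeletionTimestamp.IsZero() tells whether the object is being deleted. When a CR instance is deleted, Kubernetes sets its DeletionTimestamp to the time of deletion. In that case the controller removes its finalizer:

```go
trackingServer.ObjectMeta.Finalizers = sliceutil.RemoveString(trackingServer.ObjectMeta.Finalizers, func(item string) bool {
	return item == finalizer
})
```

In Kubernetes, finalizers gate the deletion of a resource. A resource being deleted is first marked for deletion (its DeletionTimestamp is set). It then stays in the API, and no new finalizers can be added, until its finalizers are cleared. Once the controller has cleaned up the related resources, it removes its finalizer, which tells Kubernetes it may actually delete the object. So after removing the finalizer, the current reconcile should simply return.

When the resource is not being deleted, the controller checks whether the finalizer is present and adds it if not.

After that come the reconcilers for each dependent resource: reconcilePersistentVolume, reconcileSecret, reconcileDeployment, reconcileService, and reconcileIngress.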

```go
func (r *TrackingServerReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	logger := r.Logger.WithValues("trackingServer", req.NamespacedName)
	// Note: passing the ctx received here instead would let the manager cancel in-flight calls.
	rootCtx := context.Background()

	trackingServer := &experimentv1alpha2.TrackingServer{}
	if err := r.Get(rootCtx, req.NamespacedName, trackingServer); err != nil {
		// The object may already be gone; NotFound is not worth retrying.
		return ctrl.Result{}, client.IgnoreNotFound(err)
	}

	if trackingServer.ObjectMeta.DeletionTimestamp.IsZero() {
		// Not being deleted: make sure our finalizer is registered.
		if !sliceutil.HasString(trackingServer.ObjectMeta.Finalizers, finalizer) {
			trackingServer.ObjectMeta.Finalizers = append(trackingServer.ObjectMeta.Finalizers, finalizer)
			if err := r.Update(rootCtx, trackingServer); err != nil {
				return ctrl.Result{}, err
			}
		}
	} else {
		// Being deleted: release the finalizer so the API server can remove the object.
		if sliceutil.HasString(trackingServer.ObjectMeta.Finalizers, finalizer) {
			trackingServer.ObjectMeta.Finalizers = sliceutil.RemoveString(trackingServer.ObjectMeta.Finalizers, func(item string) bool {
				return item == finalizer
			})
			logger.V(4).Info("update trackingServer")
			if err := r.Update(rootCtx, trackingServer); err != nil {
				logger.Error(err, "update trackingServer failed")
				return ctrl.Result{}, err
			}
		}
		return ctrl.Result{}, nil
	}

	// Reconcile each dependent resource in turn; stop at the first failure
	// and record a Warning event on the TrackingServer.
	if err := r.reconcilePersistentVolume(rootCtx, logger, trackingServer); err != nil {
		r.Recorder.Event(trackingServer, corev1.EventTypeWarning, failedSynced, fmt.Sprintf(syncFailMessage, err))
		return reconcile.Result{}, err
	}
	if err := r.reconcileSecret(rootCtx, logger, trackingServer); err != nil {
		r.Recorder.Event(trackingServer, corev1.EventTypeWarning, failedSynced, fmt.Sprintf(syncFailMessage, err))
		return reconcile.Result{}, err
	}
	if err := r.reconcileDeployment(rootCtx, logger, trackingServer); err != nil {
		r.Recorder.Event(trackingServer, corev1.EventTypeWarning, failedSynced, fmt.Sprintf(syncFailMessage, err))
		return reconcile.Result{}, err
	}
	if err := r.reconcileService(rootCtx, logger, trackingServer); err != nil {
		r.Recorder.Event(trackingServer, corev1.EventTypeWarning, failedSynced, fmt.Sprintf(syncFailMessage, err))
		return reconcile.Result{}, err
	}
	if err := r.reconcileIngress(rootCtx, logger, trackingServer); err != nil {
		r.Recorder.Event(trackingServer, corev1.EventTypeWarning, failedSynced, fmt.Sprintf(syncFailMessage, err))
		return reconcile.Result{}, err
	}

	r.Recorder.Event(trackingServer, corev1.EventTypeNormal, successSynced, messageResourceSynced)
	return ctrl.Result{}, nil
}
```

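The reconcile logic itself is simple. In Kubernetes a resource has two states: the desired state and the current (running) state. Reconciliation is the continuous process of driving the current state toward the desired state. Let's look at the Deployment and Ingress reconcilers.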

Deployment

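The code first builds the desired Deployment, expectDployment, and then looks up the existing Deployment with the same name, currentDeployment. If none exists, expectDployment is created. If one exists, its Spec is compared with expectDployment's, and if they differ, currentDeployment is updated to the desired values.

controllerutil.SetControllerReference sets the Deployment's OwnerReference to the TrackingServer instance.

In Kubernetes, an OwnerReference marks one resource as a dependent of another. When the owner is deleted, Kubernetes deletes its dependents. This is Kubernetes' garbage collection mechanism.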

```go
func (r *TrackingServerReconciler) reconcileDeployment(ctx context.Context, logger logr.Logger, instance *experimentv1alpha2.TrackingServer) error {
	expectDployment := newDeploymentForTrackingServer(instance)
	if err := controllerutil.SetControllerReference(instance, expectDployment, scheme.Scheme); err != nil {
		logger.Error(err, "set controller reference failed")
		return err
	}

	currentDeployment := &appsv1.Deployment{}
	if err := r.Get(ctx, types.NamespacedName{Name: instance.Name, Namespace: instance.Namespace}, currentDeployment); err != nil {
		if errors.IsNotFound(err) {
			logger.V(4).Info("create trackingserver deployment", "trackingserver", instance.Name)
			if err := r.Create(ctx, expectDployment); err != nil {
				logger.Error(err, "create trackingserver deployment failed")
				return err
			}
			return nil
		}
		logger.Error(err, "get trackingserver deployment failed")
		return err
	} else {
		if !reflect.DeepEqual(expectDployment.Spec, currentDeployment.Spec) {
			currentDeployment.Spec = expectDployment.Spec
			logger.V(4).Info("update trackingserver deployment", "trackingserver", instance.Name)
			if err := r.Update(ctx, currentDeployment); err != nil {
				logger.Error(err, "update trackingserver deployment failed")
				return err
			}
		}
	}
	return nil
}
```

newDeploymentForTrackingServer builds the Deployment object:

```go
func newDeploymentForTrackingServer(instance *experimentv1alpha2.TrackingServer) *appsv1.Deployment {
	replicas := instance.Spec.Size
	labels := labelsForTrackingServer(instance.Name)

	deployment := &appsv1.Deployment{
		ObjectMeta: metav1.ObjectMeta{
			Name:      instance.Name,
			Namespace: instance.Namespace,
		},
		Spec: appsv1.DeploymentSpec{
			Replicas: &replicas,
			Selector: &metav1.LabelSelector{
				MatchLabels: labels,
			},
			Template: corev1.PodTemplateSpec{
				ObjectMeta: metav1.ObjectMeta{
					Labels: labels,
				},
				Spec: corev1.PodSpec{
					Containers: []corev1.Container{
						{
							Image:           instance.Spec.Image,
							Name:            "mlflow",
							ImagePullPolicy: corev1.PullIfNotPresent,
							Ports: []corev1.ContainerPort{{
								ContainerPort: 5000,
								Name:          "server",
							}},
							Env: []corev1.EnvVar{
								{Name: "MLFLOW_TRACKING_URI", Value: instance.Spec.URL},
								{Name: "MLFLOW_S3_ENDPOINT_URL", Value: instance.Spec.S3_ENDPOINT_URL},
								{Name: "AWS_ACCESS_KEY_ID", Value: instance.Spec.AWS_ACCESS_KEY},
								{Name: "AWS_SECRET_ACCESS_KEY", Value: instance.Spec.AWS_SECRET_KEY},
								{Name: "ARTIFACT_ROOT", Value: instance.Spec.ARTIFACT_ROOT},
								{Name: "BACKEND_URI", Value: instance.Spec.BACKEND_URI},
							},
						},
					},
				},
			},
		},
	}

	// Mount the PVC only when both VolumeSize and StorageClassName are set,
	// the same condition under which requirement 1 creates the PVC.
	if instance.Spec.VolumeSize != "" && instance.Spec.StorageClassName != "" {
		deployment.Spec.Template.Spec.Volumes = []corev1.Volume{
			{
				Name: "trackingserver-sqllite-data",
				VolumeSource: corev1.VolumeSource{
					PersistentVolumeClaim: &corev1.PersistentVolumeClaimVolumeSource{
						ClaimName: instance.Name,
					},
				},
			},
		}
		deployment.Spec.Template.Spec.Containers[0].VolumeMounts = []corev1.VolumeMount{
			{
				Name:      "trackingserver-sqllite-data",
				MountPath: "/mlflow",
			},
		}
	}
	return deployment
}
```
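labelsForTrackingServer is not shown in the article. The Service output at the end carries the labels app: trackingserver and ts_name: trackingserver, so the version below is only a guess. It assumes app is a constant and ts_name is the instance name; both are hypothetical. The sketch also shows why the same label set is used for Selector.MatchLabels and the pod template. A selector matches a pod when every key/value pair is present on it, the API server rejects a Deployment whose selector does not match its template labels, and the per-instance ts_name keeps two TrackingServers in one namespace from selecting each other's pods.

```go
package main

import "fmt"

// labelsForTrackingServer is a hypothetical reconstruction based on the
// labels visible in the Service output; the real helper may differ.
func labelsForTrackingServer(name string) map[string]string {
	return map[string]string{"app": "trackingserver", "ts_name": name}
}

// selectorMatches reports whether every selector key/value is present in
// labels, which is how MatchLabels and a Service selector choose pods.
func selectorMatches(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	sel := labelsForTrackingServer("trackingserver")
	fmt.Println(selectorMatches(sel, labelsForTrackingServer("trackingserver"))) // true
	fmt.Println(selectorMatches(sel, labelsForTrackingServer("another")))        // false
}
```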

Ingress

Depending on IngressController, the matching reconciler is called.

```go
func (r *TrackingServerReconciler) reconcileIngress(ctx context.Context, logger logr.Logger, instance *experimentv1alpha2.TrackingServer) error {
	secret := &corev1.Secret{}
	if err := r.Get(ctx, types.NamespacedName{Name: instance.Name, Namespace: instance.Namespace}, secret); err != nil {
		if !errors.IsNotFound(err) {
			return err
		}
		secret = nil
	}

	if r.IngressController == "traefik" {
		if err := r.reconcileTraefikRoute(ctx, logger, instance, secret); err != nil {
			return err
		}
		return nil
	}

	if r.IngressController == "nginx" {
		if err := r.reconcileNginxIngress(ctx, logger, instance, secret); err != nil {
			return err
		}
		return nil
	}
	return nil
}
```
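As written, an IngressController value other than "traefik" or "nginx" falls through and returns nil, so a typo in the configuration silently produces no ingress. The sketch below is a hypothetical alternative that reports unknown values instead. It also restates how the Secret lookup feeds the Traefik entry points. The names ingressKinds and entryPoints are illustrative only, and the "Ingress" kind for nginx is an assumption, because reconcileNginxIngress is not shown.

```go
package main

import "fmt"

// ingressKinds names the objects reconciled per ingress controller and
// rejects unknown values instead of ignoring them.
func ingressKinds(controller string) ([]string, error) {
	switch controller {
	case "traefik":
		return []string{"IngressRoute", "Middleware"}, nil
	case "nginx":
		return []string{"Ingress"}, nil // assumed; reconcileNginxIngress is not shown
	default:
		return nil, fmt.Errorf("unsupported IngressController %q (want traefik or nginx)", controller)
	}
}

// entryPoints mirrors ingressRouteForTrackingServer: the HTTPS entry point
// is added only when the TLS Secret was found.
func entryPoints(secretFound bool) []string {
	if secretFound {
		return []string{"websecure", "web"}
	}
	return []string{"web"}
}

func main() {
	kinds, err := ingressKinds("traefik")
	fmt.Println(kinds, err, entryPoints(true)) // [IngressRoute Middleware] <nil> [websecure web]
	_, err = ingressKinds("haproxy")
	fmt.Println(err)
}
```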

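Here ingress is configured with Traefik's IngressRoute and Middleware, and the logic is essentially the same as for the Deployment. One special point: IngressRoute and Middleware are themselves CRDs. The reconciler's client cannot work with them directly unless the Traefik API types are registered in the manager's scheme, so the Traefik clientset is used for these operations instead:

```go
_, err = r.TraefikClient.TraefikV1alpha1().Middlewares(instance.Namespace).Create(ctx, expectMiddleware, metav1.CreateOptions{})
_, err = r.TraefikClient.TraefikV1alpha1().Middlewares(instance.Namespace).Update(ctx, currentMiddleware, metav1.UpdateOptions{})
```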

```go
func (r *TrackingServerReconciler) reconcileTraefikRoute(ctx context.Context, logger logr.Logger, instance *experimentv1alpha2.TrackingServer, secret *corev1.Secret) error {
	parsedUrl, err := url.Parse(instance.Spec.URL)
	if err != nil {
		logger.Error(err, "parse url failed")
		return err
	}

	expectIngressRoute := ingressRouteForTrackingServer(instance, secret, parsedUrl)
	if err := controllerutil.SetControllerReference(instance, expectIngressRoute, scheme.Scheme); err != nil {
		logger.Error(err, "set controller reference failed")
		return err
	}
	currentIngressRoute, err := r.TraefikClient.TraefikV1alpha1().IngressRoutes(instance.Namespace).Get(ctx, instance.Name, metav1.GetOptions{})
	if err != nil {
		if errors.IsNotFound(err) {
			_, err = r.TraefikClient.TraefikV1alpha1().IngressRoutes(instance.Namespace).Create(ctx, expectIngressRoute, metav1.CreateOptions{})
			if err != nil {
				logger.Error(err, "create IngressRoute failed")
				return err
			}
		} else {
			return err
		}
	} else {
		if !reflect.DeepEqual(expectIngressRoute.Spec, currentIngressRoute.Spec) {
			currentIngressRoute.Spec = expectIngressRoute.Spec
			_, err = r.TraefikClient.TraefikV1alpha1().IngressRoutes(instance.Namespace).Update(ctx, currentIngressRoute, metav1.UpdateOptions{})
			if err != nil {
				logger.Error(err, "update IngressRoute failed")
				return err
			}
		}
	}

	expectMiddleware := middlewareForTrackingServer(instance, parsedUrl)
	if err := controllerutil.SetControllerReference(instance, expectMiddleware, scheme.Scheme); err != nil {
		logger.Error(err, "set controller reference failed")
		return err
	}
	currentMiddleware, err := r.TraefikClient.TraefikV1alpha1().Middlewares(instance.Namespace).Get(ctx, instance.Name, metav1.GetOptions{})
	if err != nil {
		if errors.IsNotFound(err) {
			_, err = r.TraefikClient.TraefikV1alpha1().Middlewares(instance.Namespace).Create(ctx, expectMiddleware, metav1.CreateOptions{})
			if err != nil {
				logger.Error(err, "create Middlewares failed")
				return err
			}
		} else {
			logger.Error(err, "get Middlewares failed")
			return err
		}
	} else {
		if !reflect.DeepEqual(expectMiddleware.Spec, currentMiddleware.Spec) {
			currentMiddleware.Spec = expectMiddleware.Spec
			_, err = r.TraefikClient.TraefikV1alpha1().Middlewares(instance.Namespace).Update(ctx, currentMiddleware, metav1.UpdateOptions{})
			if err != nil {
				logger.Error(err, "update Middlewares failed")
				return err
			}
		}
	}
	return nil
}
```

Building the IngressRoute and Middleware:

```go
func ingressRouteForTrackingServer(instance *experimentv1alpha2.TrackingServer, secret *corev1.Secret, parsedUrl *url.URL) *traefikv1alpha1.IngressRoute {
	ingressRoute := &traefikv1alpha1.IngressRoute{
		ObjectMeta: metav1.ObjectMeta{
			Name:      instance.Name,
			Namespace: instance.Namespace,
		},
		Spec: traefikv1alpha1.IngressRouteSpec{
			EntryPoints: []string{"web"},
		},
	}

	if secret != nil {
		ingressRoute.Spec.EntryPoints = []string{"websecure", "web"}
		ingressRoute.Spec.TLS = &traefikv1alpha1.TLS{
			SecretName: instance.Name,
		}
	}

	if parsedUrl.Path == "" {
		ingressRoute.Spec.Routes = append(ingressRoute.Spec.Routes, traefikv1alpha1.Route{
			Match: fmt.Sprintf("Host(`%s`)", parsedUrl.Host),
			Kind:  "Rule",
			Services: []traefikv1alpha1.Service{
				{
					LoadBalancerSpec: traefikv1alpha1.LoadBalancerSpec{
						Name: instance.Name,
						Port: intstr.FromString("server"),
					},
				},
			},
		})
	} else {
		ingressRoute.Spec.Routes = append(ingressRoute.Spec.Routes, []traefikv1alpha1.Route{
			{
				Match: fmt.Sprintf("Host(`%s`) && PathPrefix(`%s`)", parsedUrl.Host, parsedUrl.Path),
				Kind:  "Rule",
				Services: []traefikv1alpha1.Service{
					{
						LoadBalancerSpec: traefikv1alpha1.LoadBalancerSpec{
							Name: instance.Name,
							Port: intstr.FromString("server"),
						},
					},
				},
				Middlewares: []traefikv1alpha1.MiddlewareRef{
					{
						Name: instance.Name,
					},
				},
			},
			{
				Match: fmt.Sprintf("Host(`%s`) && (PathPrefix(`/static-files`) || PathPrefix(`/ajax-api`))", parsedUrl.Host),
				Kind:  "Rule",
				Services: []traefikv1alpha1.Service{
					{
						LoadBalancerSpec: traefikv1alpha1.LoadBalancerSpec{
							Name: instance.Name,
							Port: intstr.FromString("server"),
						},
					},
				},
			},
		}...)
	}
	return ingressRoute
}

func middlewareForTrackingServer(instance *experimentv1alpha2.TrackingServer, parsedUrl *url.URL) *traefikv1alpha1.Middleware {
	middleware := &traefikv1alpha1.Middleware{
		ObjectMeta: metav1.ObjectMeta{
			Name:      instance.Name,
			Namespace: instance.Namespace,
		},
		Spec: traefikv1alpha1.MiddlewareSpec{
			StripPrefix: &dynamic.StripPrefix{
				Prefixes: []string{parsedUrl.Path},
			},
		},
	}
	return middleware
}
```
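The routing rules depend only on the parsed URL, so they can be checked without Traefik. The stdlib-only sketch below rebuilds the same Match strings. For the sample URL, its first rule is the one that appears in the IngressRoute output later on. It also approximates the StripPrefix middleware. routeRules and stripPrefix are hypothetical helpers, and stripPrefix is a simplification of Traefik's behaviour, not its implementation. The extra /static-files and /ajax-api route is presumably there because the MLflow UI requests those absolute paths. Note that url.Parse("https://host/").Path is "/", not "", so a trailing slash selects the prefixed branch.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// routeRules reproduces the Match expressions built by ingressRouteForTrackingServer.
func routeRules(rawURL string) ([]string, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return nil, err
	}
	if u.Path == "" {
		return []string{fmt.Sprintf("Host(`%s`)", u.Host)}, nil
	}
	return []string{
		fmt.Sprintf("Host(`%s`) && PathPrefix(`%s`)", u.Host, u.Path),
		fmt.Sprintf("Host(`%s`) && (PathPrefix(`/static-files`) || PathPrefix(`/ajax-api`))", u.Host),
	}, nil
}

// stripPrefix approximates a StripPrefix middleware: it removes the first
// matching prefix and keeps the forwarded path absolute.
func stripPrefix(path string, prefixes []string) string {
	for _, p := range prefixes {
		if strings.HasPrefix(path, p) {
			rest := strings.TrimPrefix(path, p)
			if !strings.HasPrefix(rest, "/") {
				rest = "/" + rest
			}
			return rest
		}
	}
	return path
}

func main() {
	rules, err := routeRules("https://mlflow.platform.aiscope.io/platform")
	if err != nil {
		panic(err)
	}
	for _, r := range rules {
		fmt.Println(r)
	}
	fmt.Println(stripPrefix("/platform/api/2.0/mlflow/experiments/list", []string{"/platform"}))
	// /api/2.0/mlflow/experiments/list
}
```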

The other resources are reconciled with essentially the same logic.

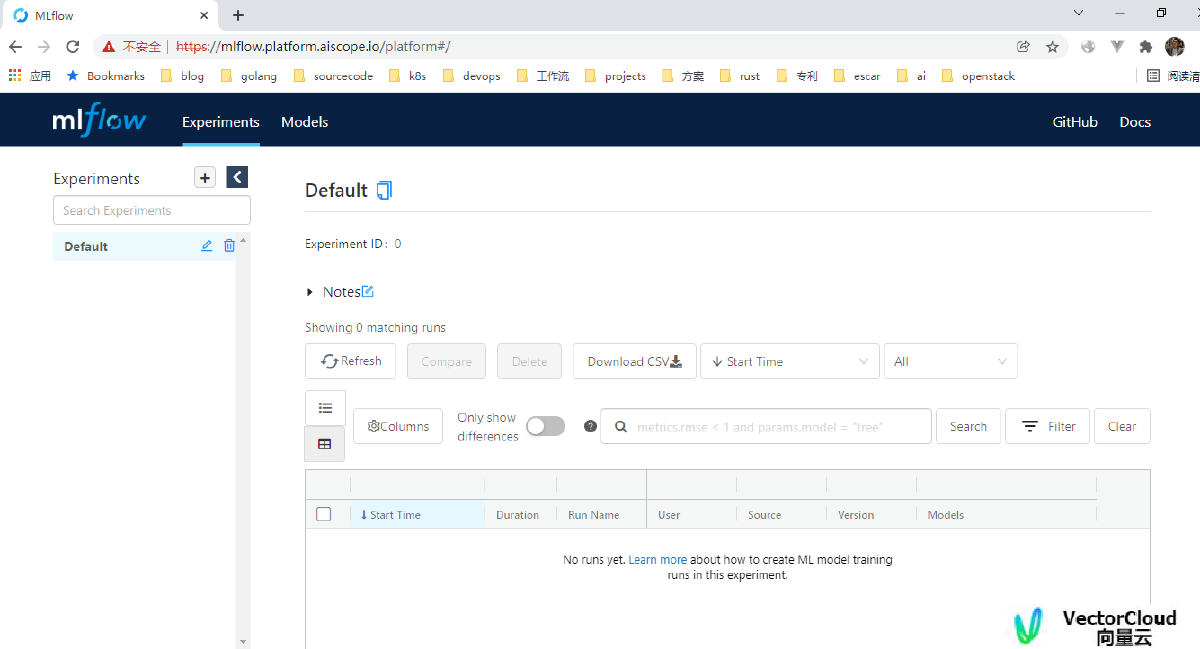

Now let's run the controller and look at the resources it creates.

First, install the CRDs:

```shell
sukai@SuKai:/mnt/g/ai/aiscope/config/crd$ cd /mnt/g/ai/aiscope/config/crd
sukai@SuKai:/mnt/g/ai/aiscope/config/crd$ ls bases/
experiment.aiscope_codeservers.yaml       iam.aiscope_globalroles.yaml     iam.aiscope_users.yaml
experiment.aiscope_jupyternotebooks.yaml  iam.aiscope_groupbindings.yaml   iam.aiscope_workspacerolebindings.yaml
experiment.aiscope_trackingservers.yaml   iam.aiscope_groups.yaml          iam.aiscope_workspaceroles.yaml
iam.aiscope_globalrolebindings.yaml       iam.aiscope_loginrecords.yaml    tenant.aiscope_workspaces.yaml
sukai@SuKai:/mnt/g/ai/aiscope/config/crd$ kubectl apply -f bases/
```

Run the controller:

```shell
PS G:\ai\aiscope> go run .\cmd\controller-manager\controller-manager.go
```

Create a certificate:

```shell
HOST=mlflow.platform.aiscope.io
KEY_FILE=tls.key
CERT_FILE=tls.cert
openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout ${KEY_FILE} -out ${CERT_FILE} -subj "/CN=${HOST}/O=${HOST}"
```

Create the TrackingServer instance:

```yaml
apiVersion: experiment.aiscope/v1alpha2
kind: TrackingServer
metadata:
  name: trackingserver
  namespace: aiscope-devops-platform
spec:
  size: 1
  image: mlflow:aiscope
  s3_endpoint_url: "http://s3.platform.aiscope.io/"
  aws_access_key: "mlflow"
  aws_secret_key: "mlflow1234"
  artifact_root: "s3://mlflow/"
  backend_uri: "sqlite:////mlflow/mlflow.db"
  url: "https://mlflow.platform.aiscope.io/platform"
  volumeSize: ""
  storageClassName: ""
  cert: |
    -----BEGIN CERTIFICATE-----
    MIIDdTCCAl2gAwIBAgIUekacMOsjvwjsy+7eQEFPSyQG3KwwDQYJKoZIhvcNAQEL
    BQAwSjEjMCEGA1UEAwwabWxmbG93LnBsYXRmb3JtLmFpc2NvcGUuaW8xIzAhBgNV
    BAoMGm1sZmxvdy5wbGF0Zm9ybS5haXNjb3BlLmlvMB4XDTIyMDEyMDEyMDA0OFoX
    DTIzMDEyMDEyMDA0OFowSjEjMCEGA1UEAwwabWxmbG93LnBsYXRmb3JtLmFpc2Nv
    cGUuaW8xIzAhBgNVBAoMGm1sZmxvdy5wbGF0Zm9ybS5haXNjb3BlLmlvMIIBIjAN
    BgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAm5ipmsir1k1eAsECC80rRgxGbg8Q
    dBc+fEhtu6DxbKDD2OOsa8VPvls/Aq9/EaU3imouCXZfbUhCSr6L/CwlY8GGiBIB
    cfJscUrGarIak61mJxS4UVtaKYf9eVhyzFWLCcNfZA2WfPDe2jQjGPOc0Z4cQuI+
    1A7u7oVYlWUMJ0DfVdXod32NvGr8vzbHFMhvn6nyzFZQbna6BwPF/hL84OeoNv+o
    wknkRx/Se4psuEs0jh7WaM9QhfHVNG6YzSXoJzwRPFPMmN41Ac0eQcLE9VdM7u/d
    VhppteZffEEHTifvVW53dq2IzE/TWO5q3+3fTweq25oscubYWQIElob1FQIDAQAB
    o1MwUTAdBgNVHQ4EFgQURAC2XSoTpHFgdd3S/uBuQr2KudIwHwYDVR0jBBgwFoAU
    RAC2XSoTpHFgdd3S/uBuQr2KudIwDwYDVR0TAQH/BAUwAwEB/zANBgkqhkiG9w0B
    AQsFAAOCAQEALszXkPFtyI0s53c4rXWFJxKlZKZrR2+OTrJDG6wwIB7AGlGuPeDm
    YrOINqm6Abc16pEyFLrivuFM471mS5zd3pFr725B2j6LR8e4+vUwmNaUTDO0HzOS
    vpE5bfxHO+9YPuGPfp+rbg9lr6QqeOzBuV4G16b4RIhIou4SIQwjIOyoNlczDNUB
    qOt4YR9y8lJcHFgyqZWdtouUrBmkRjw3VQrN5tLwc7uYt5dSebQ6qwKf0cMCYA/i
    oij+SxzMfurUQeyH3sFNLTHiqMdCUMFayr4GM+xTU+Zw1eEgmS1+7TfJSP6EKPzp
    sqaHacGN85vBjOi66Is+JbAoPrJy0jzluw==
    -----END CERTIFICATE-----
  key: |
    -----BEGIN PRIVATE KEY-----
    MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCbmKmayKvWTV4C
    wQILzStGDEZuDxB0Fz58SG27oPFsoMPY46xrxU++Wz8Cr38RpTeKai4Jdl9tSEJK
    vov8LCVjwYaIEgFx8mxxSsZqshqTrWYnFLhRW1oph/15WHLMVYsJw19kDZZ88N7a
    NCMY85zRnhxC4j7UDu7uhViVZQwnQN9V1eh3fY28avy/NscUyG+fqfLMVlBudroH
    A8X+Evzg56g2/6jCSeRHH9J7imy4SzSOHtZoz1CF8dU0bpjNJegnPBE8U8yY3jUB
    zR5BwsT1V0zu791WGmm15l98QQdOJ+9Vbnd2rYjMT9NY7mrf7d9PB6rbmixy5thZ
    AgSWhvUVAgMBAAECggEAQAiszBmHtnMynFmIGQk/pN1KYuLqN4yVV5qLJmuOz9C9
    qNXR0KxsK//rR5Sn68AdwmX+OkCv9w6E0bPncklMvegYEImwdI97F4jZbXGMxHfZ
    EX5SeJDq4yqnIzhGTldqGAOCj2+UHikW3aAVTaB8SjwSj2gCyUy4Agt4sErcnI5O
    0PduZaz1p/lDFJBEAq1m76tRoFryzsZdDbgyS+6ZIr8mXrw74m6IKYrhrrcmjcZL
    cWJpSjH73NhstwEzpaqVdq+i4ti4mQN09/hq+rEmwQVO24lxgJt4qXv/YQVZveUu
    5Sq5kqSwHwK6L0rwiMoPN7GZ/a70hAURZpPSijPhgQKBgQDKexionUZfUFvL9Q5y
    D+nVvSPEo9dPpjdKLgLYsG/TSOWhqe5PG6PAQn57JYkl/1z5QGdMFWK5u6/rAk/A
    4duwzopTe1eX2ASusLxxAbcCQvwF8yKnxSR4ylBOMcH5cg1fjeeJIk3HTxEuGS/A
    cPsViPostBxJEoyKA3yKK33VoQKBgQDEuR4NNCydK+8xkdSl5ew5r6CaMg7qD9A7
    6vzr2/wqT9cJY+CuNLxFB4jGSbluY4kROuMIxxi9L34zXPAvh01p5YV3dDOE83V0
    P/xnLA4jgpp0fPiRHOza4YmpyLqKbRvawDZsz+pMbmay0mizh2v6U27VOmstntmT
    bWZ8nEZC9QKBgQC/sGHb32kr+wusv0b5vQ8HBpTCKMpB4X4egAi50+9J+41Jy3KM
    +gHAljLfqt14V5VRcyNX6Dca1xDoT7vpQumVLTPPbMm0OxHTwAXmhlUXkwq9Jzz7
    z6uDnyT/oiOdX9hBSjqUnSE9OyFsnvOSIGPUM2WExM6ybxkV388bj6kFgQKBgG+X
    cuyahBREL9M2niHdYzr13WyaqMstXTof+ojwqQJ3d8vj1Df9wi6GL5gLihyMadxU
    QyVfizEGF9ibB8RuRAOmJyezyuXIFQB0q4D7BKowE92wZnAqsFEZTzX4n5iWfA6C
    qlzfNFFW7vrRUINGdoHxghWCpfmi+lke3dwh6dlhAoGBALiAsKf6dktr/utuGthg
    WwYt6ECGQ/0O+yPGtND6kYKC/A50Vg8zUVTzJpmZluAWCoMUcfpY5QqD42fZaQV4
    iBn2SNmcvduQDkosLo1eZcrZxbHlFkMzkvMh/8aQHQZQokIS6RR3fUiZK7+JV7Op
    X8sS5WsPy440naXhM+Lk8Y3H
    -----END PRIVATE KEY-----
```

```shell
sukai@SuKai:/mnt/g/ai/aiscope/config$ cd samples/
sukai@SuKai:/mnt/g/ai/aiscope/config/samples$ ls
experiment_v1alpha2_codeserver.yaml       iam_v1alpha2_globalrolebinding.yaml  iam_v1alpha2_user.yaml
experiment_v1alpha2_jupyternotebook.yaml  iam_v1alpha2_group.yaml              iam_v1alpha2_workspacerole.yaml
experiment_v1alpha2_trackingserver.yaml   iam_v1alpha2_groupbinding.yaml       iam_v1alpha2_workspacerolebinding.yaml
iam_v1alpha2_globalrole.yaml              iam_v1alpha2_loginrecord.yaml        tenant_v1alpha2_workspace.yaml
sukai@SuKai:/mnt/g/ai/aiscope/config/samples$ kubectl apply -f experiment_v1alpha2_trackingserver.yaml
trackingserver.experiment.aiscope/trackingserver created
sukai@SuKai:/mnt/g/ai/aiscope/config/samples$
```

Check the resources:

```shell
sukai@SuKai:~$ kubectl -n aiscope-devops-platform get trackingserver
NAME             S3_ENDPOINT_URL                  ARTIFACT_ROOT
trackingserver   http://s3.platform.aiscope.io/   s3://mlflow/
sukai@SuKai:~$ kubectl -n aiscope-devops-platform describe trackingserver trackingserver
Name:         trackingserver
Namespace:    aiscope-devops-platform
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"experiment.aiscope/v1alpha2","kind":"TrackingServer","metadata":{"annotations":{},"name":"trackingserver","namespace":"aisc...
API Version:  experiment.aiscope/v1alpha2
Kind:         TrackingServer
Metadata:
  Creation Timestamp:  2022-01-23T11:54:07Z
  Finalizers:
    finalizers.aiscope.io/trackingserver
  Generation:  1
  Managed Fields:
    API Version:  experiment.aiscope/v1alpha2
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:finalizers:
          .:
          v:"finalizers.aiscope.io/trackingserver":
    Manager:      controller-manager.exe
    Operation:    Update
    Time:         2022-01-23T11:54:07Z
    API Version:  experiment.aiscope/v1alpha2
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:artifact_root:
        f:aws_access_key:
        f:aws_secret_key:
        f:backend_uri:
        f:cert:
        f:image:
        f:key:
        f:s3_endpoint_url:
        f:size:
        f:url:
    Manager:         kubectl
    Operation:       Update
    Time:            2022-01-23T11:54:07Z
  Resource Version:  108941
  UID:               fff12ab5-3043-4724-805a-9b5733afb98f
Spec:
  artifact_root:   s3://mlflow/
  aws_access_key:  mlflow
  aws_secret_key:  mlflow1234
  backend_uri:     sqlite:////mlflow/mlflow.db
  Cert:            -----BEGIN CERTIFICATE-----
MIIDdTCCAl2gAwIBAgIUekacMOsjvwjsy+7eQEFPSyQG3KwwDQYJKoZIhvcNAQEL
BQAwSjEjMCEGA1UEAwwabWxmbG93LnBsYXRmb3JtLmFpc2NvcGUuaW8xIzAhBgNV
BAoMGm1sZmxvdy5wbGF0Zm9ybS5haXNjb3BlLmlvMB4XDTIyMDEyMDEyMDA0OFoX
DTIzMDEyMDEyMDA0OFowSjEjMCEGA1UEAwwabWxmbG93LnBsYXRmb3JtLmFpc2Nv
cGUuaW8xIzAhBgNVBAoMGm1sZmxvdy5wbGF0Zm9ybS5haXNjb3BlLmlvMIIBIjAN
BgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAm5ipmsir1k1eAsECC80rRgxGbg8Q
dBc+fEhtu6DxbKDD2OOsa8VPvls/Aq9/EaU3imouCXZfbUhCSr6L/CwlY8GGiBIB
cfJscUrGarIak61mJxS4UVtaKYf9eVhyzFWLCcNfZA2WfPDe2jQjGPOc0Z4cQuI+
1A7u7oVYlWUMJ0DfVdXod32NvGr8vzbHFMhvn6nyzFZQbna6BwPF/hL84OeoNv+o
wknkRx/Se4psuEs0jh7WaM9QhfHVNG6YzSXoJzwRPFPMmN41Ac0eQcLE9VdM7u/d
VhppteZffEEHTifvVW53dq2IzE/TWO5q3+3fTweq25oscubYWQIElob1FQIDAQAB
o1MwUTAdBgNVHQ4EFgQURAC2XSoTpHFgdd3S/uBuQr2KudIwHwYDVR0jBBgwFoAU
RAC2XSoTpHFgdd3S/uBuQr2KudIwDwYDVR0TAQH/BAUwAwEB/zANBgkqhkiG9w0B
AQsFAAOCAQEALszXkPFtyI0s53c4rXWFJxKlZKZrR2+OTrJDG6wwIB7AGlGuPeDm
YrOINqm6Abc16pEyFLrivuFM471mS5zd3pFr725B2j6LR8e4+vUwmNaUTDO0HzOS
vpE5bfxHO+9YPuGPfp+rbg9lr6QqeOzBuV4G16b4RIhIou4SIQwjIOyoNlczDNUB
qOt4YR9y8lJcHFgyqZWdtouUrBmkRjw3VQrN5tLwc7uYt5dSebQ6qwKf0cMCYA/i
oij+SxzMfurUQeyH3sFNLTHiqMdCUMFayr4GM+xTU+Zw1eEgmS1+7TfJSP6EKPzp
sqaHacGN85vBjOi66Is+JbAoPrJy0jzluw==
-----END CERTIFICATE-----

  Image:  mlflow:aiscope
  Key:    -----BEGIN PRIVATE KEY-----
MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCbmKmayKvWTV4C
wQILzStGDEZuDxB0Fz58SG27oPFsoMPY46xrxU++Wz8Cr38RpTeKai4Jdl9tSEJK
vov8LCVjwYaIEgFx8mxxSsZqshqTrWYnFLhRW1oph/15WHLMVYsJw19kDZZ88N7a
NCMY85zRnhxC4j7UDu7uhViVZQwnQN9V1eh3fY28avy/NscUyG+fqfLMVlBudroH
A8X+Evzg56g2/6jCSeRHH9J7imy4SzSOHtZoz1CF8dU0bpjNJegnPBE8U8yY3jUB
zR5BwsT1V0zu791WGmm15l98QQdOJ+9Vbnd2rYjMT9NY7mrf7d9PB6rbmixy5thZ
AgSWhvUVAgMBAAECggEAQAiszBmHtnMynFmIGQk/pN1KYuLqN4yVV5qLJmuOz9C9
qNXR0KxsK//rR5Sn68AdwmX+OkCv9w6E0bPncklMvegYEImwdI97F4jZbXGMxHfZ
EX5SeJDq4yqnIzhGTldqGAOCj2+UHikW3aAVTaB8SjwSj2gCyUy4Agt4sErcnI5O
0PduZaz1p/lDFJBEAq1m76tRoFryzsZdDbgyS+6ZIr8mXrw74m6IKYrhrrcmjcZL
cWJpSjH73NhstwEzpaqVdq+i4ti4mQN09/hq+rEmwQVO24lxgJt4qXv/YQVZveUu
5Sq5kqSwHwK6L0rwiMoPN7GZ/a70hAURZpPSijPhgQKBgQDKexionUZfUFvL9Q5y
D+nVvSPEo9dPpjdKLgLYsG/TSOWhqe5PG6PAQn57JYkl/1z5QGdMFWK5u6/rAk/A
4duwzopTe1eX2ASusLxxAbcCQvwF8yKnxSR4ylBOMcH5cg1fjeeJIk3HTxEuGS/A
cPsViPostBxJEoyKA3yKK33VoQKBgQDEuR4NNCydK+8xkdSl5ew5r6CaMg7qD9A7
6vzr2/wqT9cJY+CuNLxFB4jGSbluY4kROuMIxxi9L34zXPAvh01p5YV3dDOE83V0
P/xnLA4jgpp0fPiRHOza4YmpyLqKbRvawDZsz+pMbmay0mizh2v6U27VOmstntmT
bWZ8nEZC9QKBgQC/sGHb32kr+wusv0b5vQ8HBpTCKMpB4X4egAi50+9J+41Jy3KM
+gHAljLfqt14V5VRcyNX6Dca1xDoT7vpQumVLTPPbMm0OxHTwAXmhlUXkwq9Jzz7
z6uDnyT/oiOdX9hBSjqUnSE9OyFsnvOSIGPUM2WExM6ybxkV388bj6kFgQKBgG+X
cuyahBREL9M2niHdYzr13WyaqMstXTof+ojwqQJ3d8vj1Df9wi6GL5gLihyMadxU
QyVfizEGF9ibB8RuRAOmJyezyuXIFQB0q4D7BKowE92wZnAqsFEZTzX4n5iWfA6C
qlzfNFFW7vrRUINGdoHxghWCpfmi+lke3dwh6dlhAoGBALiAsKf6dktr/utuGthg
WwYt6ECGQ/0O+yPGtND6kYKC/A50Vg8zUVTzJpmZluAWCoMUcfpY5QqD42fZaQV4
iBn2SNmcvduQDkosLo1eZcrZxbHlFkMzkvMh/8aQHQZQokIS6RR3fUiZK7+JV7Op
X8sS5WsPy440naXhM+Lk8Y3H
-----END PRIVATE KEY-----

  s3_endpoint_url:  http://s3.platform.aiscope.io/
  Size:             1
  URL:              https://mlflow.platform.aiscope.io/platform
Events:
  Type     Reason      Age                    From                       Message
  ----     ------      ----                   ----                       -------
  Normal   Synced      3m45s (x2 over 3m46s)  trackingserver-controller  Trackingserver synced successfully
  Warning  FailedSync  3m45s                  trackingserver-controller  Failed to sync: Operation cannot be fulfilled on deployments.apps "trackingserver": the object has been modified; please apply your changes to the latest version and try again
```

```shell
sukai@SuKai:~$ kubectl -n aiscope-devops-platform get pods
NAME                             READY   STATUS    RESTARTS        AGE
mlflow-0                         1/1     Running   4 (5h41m ago)   46h
trackingserver-8f8f8744b-p2v9v   1/1     Running   0               58s
sukai@SuKai:~$ kubectl -n aiscope-devops-platform get ingressroute
NAME             AGE
trackingserver   71s
sukai@SuKai:~$ kubectl -n aiscope-devops-platform get ingressroute trackingserver -o yaml
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  creationTimestamp: "2022-01-23T11:54:07Z"
  generation: 1
  managedFields:
  - apiVersion: traefik.containo.us/v1alpha1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:ownerReferences:
          .: {}
          k:{"uid":"fff12ab5-3043-4724-805a-9b5733afb98f"}: {}
      f:spec:
        .: {}
        f:entryPoints: {}
        f:routes: {}
        f:tls:
          .: {}
          f:secretName: {}
    manager: controller-manager.exe
    operation: Update
    time: "2022-01-23T11:54:07Z"
  name: trackingserver
  namespace: aiscope-devops-platform
  ownerReferences:
  - apiVersion: experiment.aiscope/v1alpha2
    blockOwnerDeletion: true
    controller: true
    kind: TrackingServer
    name: trackingserver
    uid: fff12ab5-3043-4724-805a-9b5733afb98f
  resourceVersion: "108950"
  uid: a6b51bb3-6c93-468b-8443-9f4a1daba18d
spec:
  entryPoints:
  - websecure
  - web
  routes:
  - kind: Rule
    match: Host(`mlflow.platform.aiscope.io`) && PathPrefix(`/platform`)
    middlewares:
    - name: trackingserver
    services:
    - name: trackingserver
      port: server
  - kind: Rule
    match: Host(`mlflow.platform.aiscope.io`) && (PathPrefix(`/static-files`) || PathPrefix(`/ajax-api`))
    services:
    - name: trackingserver
      port: server
  tls:
    secretName: trackingserver
sukai@SuKai:~$ kubectl -n aiscope-devops-platform get svc trackingserver -o yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2022-01-23T11:54:07Z"
  labels:
    app: trackingserver
    ts_name: trackingserver
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:labels:
          .: {}
          f:app: {}
          f:ts_name: {}
        f:ownerReferences:
          .: {}
          k:{"uid":"fff12ab5-3043-4724-805a-9b5733afb98f"}: {}
      f:spec:
        f:internalTrafficPolicy: {}
        f:ports:
          .: {}
          k:{"port":5000,"protocol":"TCP"}:
            .: {}
            f:name: {}
            f:port: {}
            f:protocol: {}
            f:targetPort: {}
        f:selector: {}
        f:sessionAffinity: {}
        f:type: {}
    manager: controller-manager.exe
    operation: Update
    time: "2022-01-23T11:54:07Z"
  name: trackingserver
  namespace: aiscope-devops-platform
  ownerReferences:
  - apiVersion: experiment.aiscope/v1alpha2
    blockOwnerDeletion: true
    controller: true
    kind: TrackingServer
    name: trackingserver
    uid: fff12ab5-3043-4724-805a-9b5733afb98f
  resourceVersion: "108945"
  uid: d753d133-c025-41f1-8126-55e5f83217f6
spec:
  clusterIP: 10.20.131.219
  clusterIPs:
  - 10.20.131.219
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: server
    port: 5000
    protocol: TCP
    targetPort: 5000
  selector:
    app: trackingserver
    ts_name: trackingserver
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
sukai@SuKai:~$
```