由 SuKai August 6, 2021

在训练神经网络的时候,调节超参数是必不可少的,这个过程可以更科学地训练出更高效的机器学习模型。一般我们都是通过观察在训练过程中的监测指标如损失函数的值或者测试/验证集上的准确率来判断这个模型的训练状态,并通过修改超参数来提高模型效率。

本篇介绍如何通过开源组件Optuna, Hydra, MLflow构建一个超参数优化实验环境。

超参数

常规参数是在训练期间通过机器学习算法学习的参数,这些参数可以通过训练来优化。而超参数是设置如何训练模型的参数,它们有助于训练出更好的模型,超参数不能通过训练来优化。

超参优化

超参数优化是指不是依赖人工调参,而是通过一定算法找出优化算法/机器学习/深度学习中最优/次优超参数的一类方法。HPO的本质是生成多组超参数,一次次地去训练,根据获取到的评价指标等调节再生成超参数组再训练。

Optuna

Optuna是机器学习的自动超参数优化框架,使用了采样和剪枝算法来优化超参数,快速而且高效,动态构建超参数搜索空间。

Optuna是一个超参数的优化工具,对基于树的超参数搜索进行了优化,它使用被称为TPESampler “Tree-structured Parzen Estimator”的方法,这种方法依靠贝叶斯概率来确定哪些超参数选择是最有希望的并迭代调整搜索。

Hydra

Facebook Hydra 允许开发人员通过编写和覆盖配置来简化 Python 应用程序(尤其是机器学习方面)的开发。开发人员可以借助Hydra,通过更改配置文件来更改产品的行为方式,而不是通过更改代码来适应新的用例。

Hydra提供了一种灵活的方法来开发和维护代码及配置,从而加快了机器学习研究等领域中复杂应用程序的开发。 它允许开发人员从命令行或配置文件“组合”应用程序的配置。这解决了在修改配置时可能出现的问题,例如:

维护配置的稍微不同的副本或添加逻辑以覆盖配置值。 可以在运行应用程序之前就组成和覆盖配置。 动态命令行选项卡完成功能可帮助开发人员发现复杂配置并减少错误。 可以在本地或远程启动应用程序,使用户可以利用更多的本地资源。

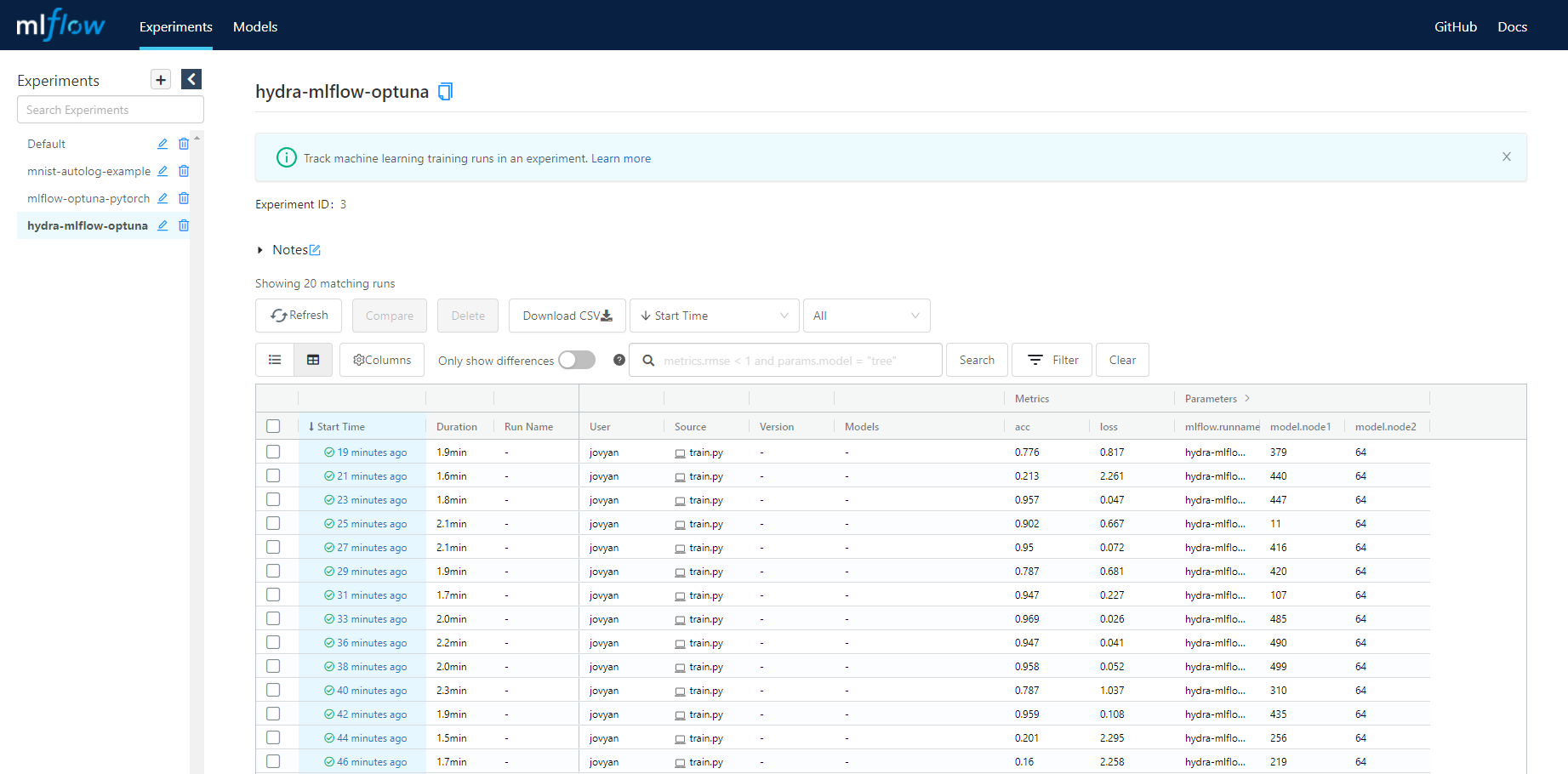

MLflow Tracking

MLflow 是一个开放源代码库,用于管理机器学习试验的生命周期。 MLFlow 跟踪是 MLflow 的一个组件,它可以记录和跟踪训练运行指标及模型项目,无论试验环境是在本地计算机上、远程计算目标上、虚拟机上,还是在 Azure Databricks 群集上。

执行过程

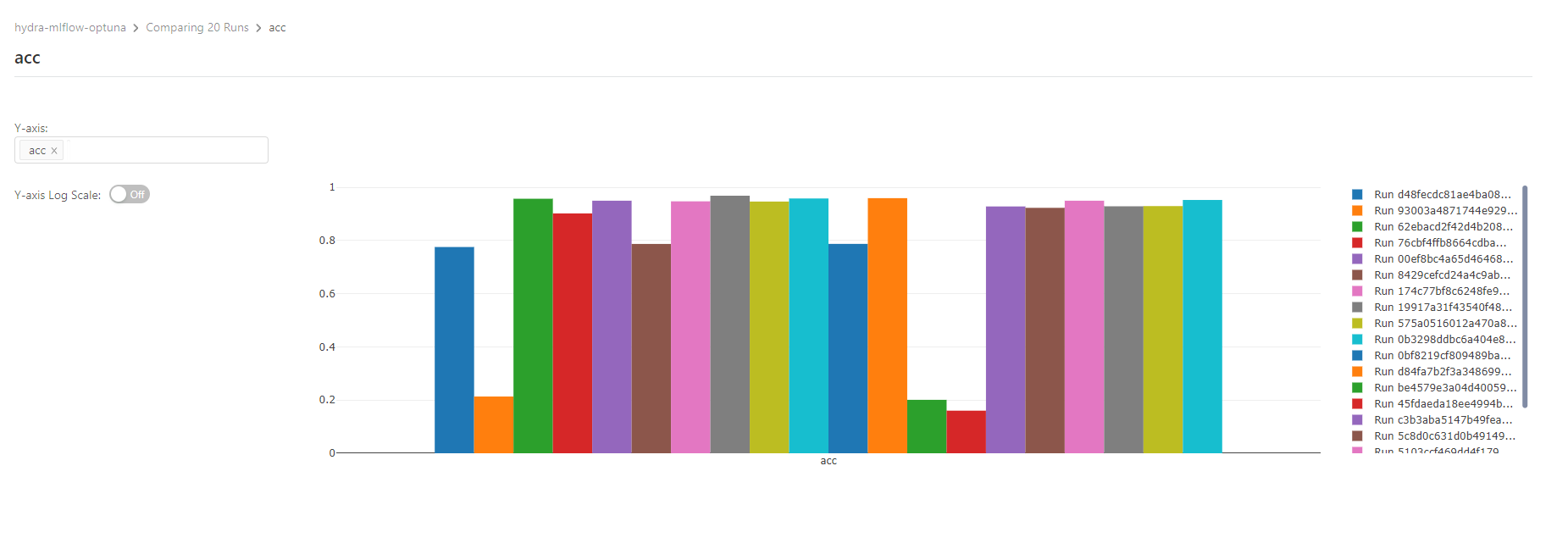

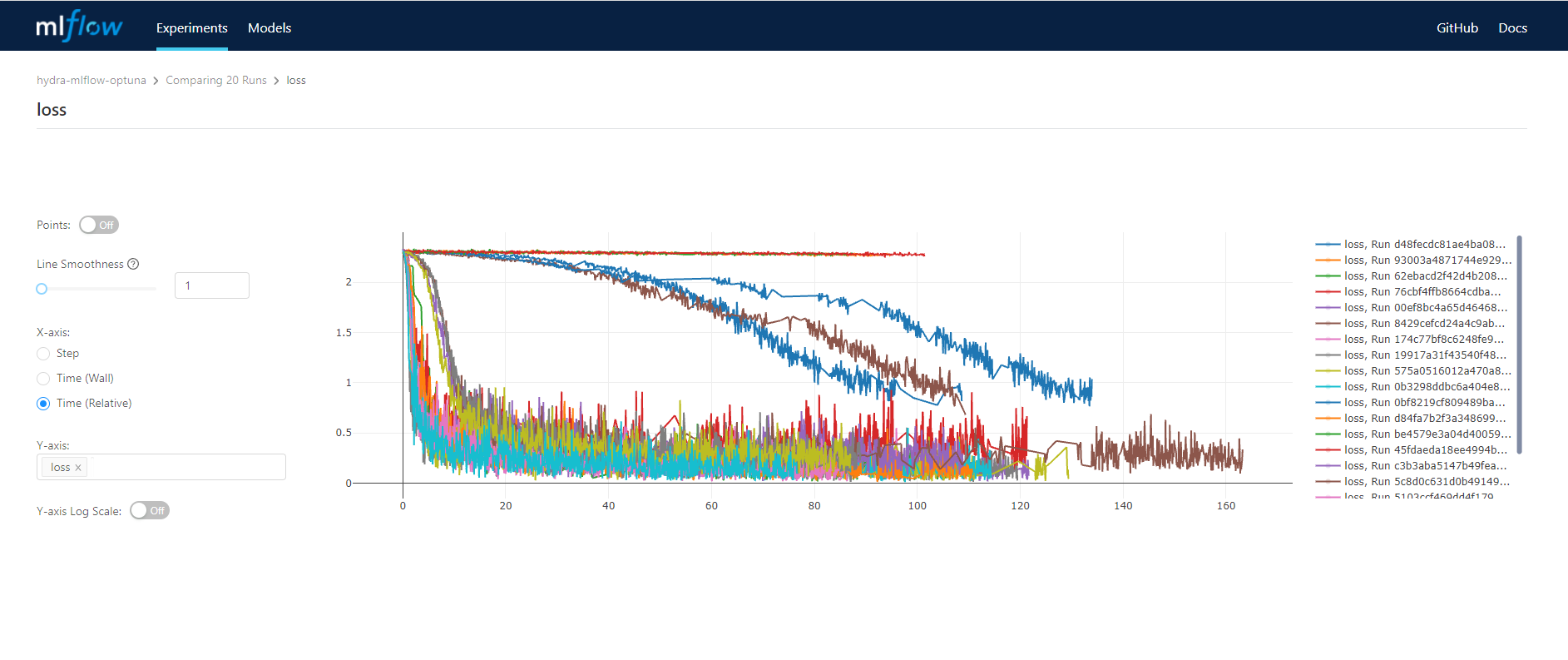

实验跟踪记录

Optuna

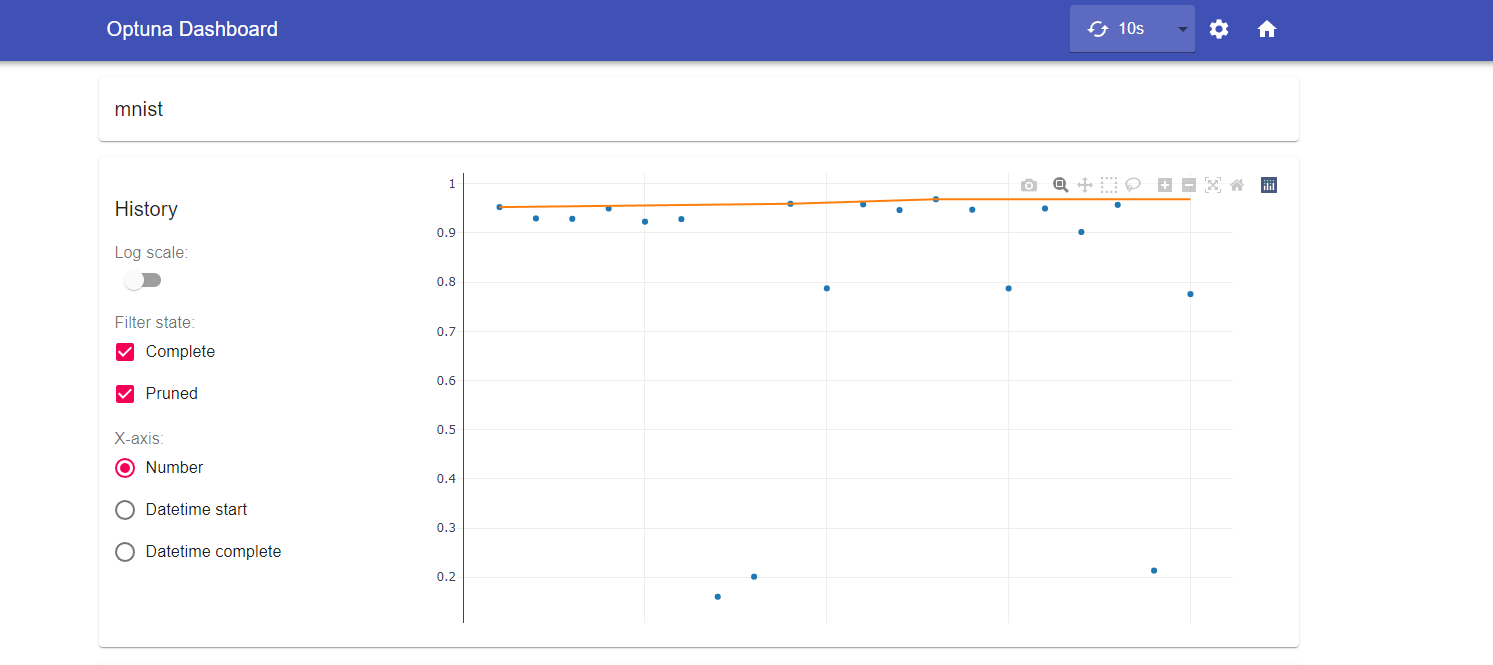

优化历史

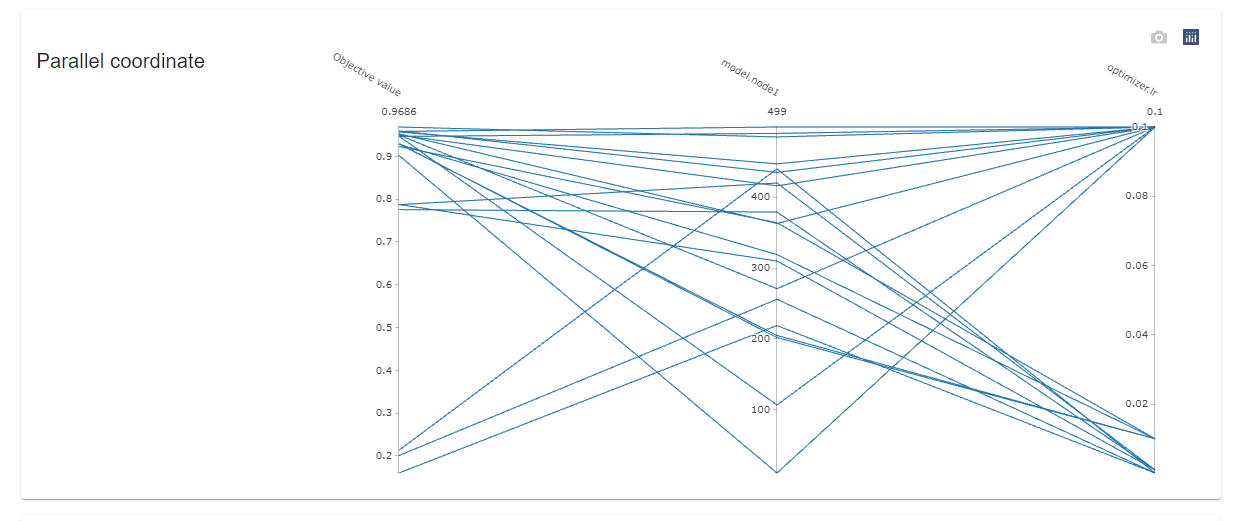

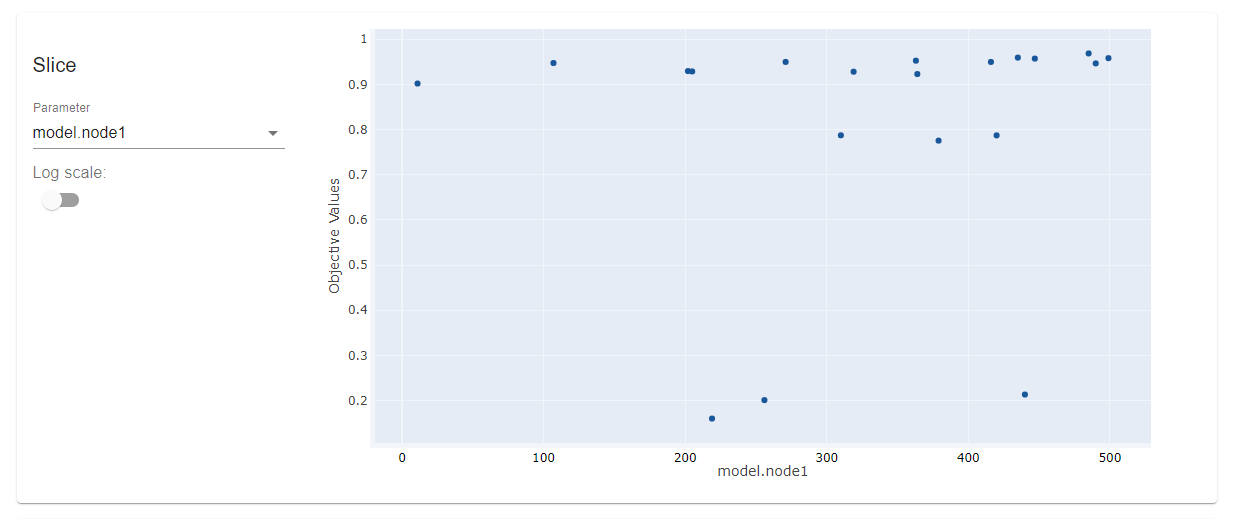

参数关系

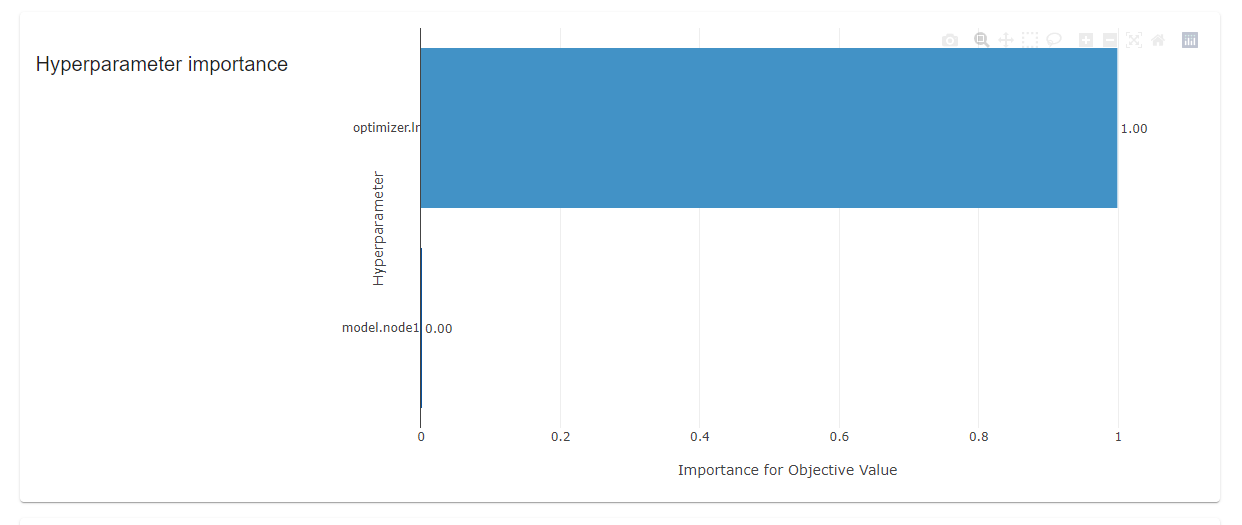

参数重要性

参数列表

环境部署

optuna-dashboard

Dockerfile

FROM node:14 AS front-builder

WORKDIR /usr/src

ADD ./package.json /usr/src/package.json

ADD ./package-lock.json /usr/src/package-lock.json

RUN npm install --registry=https://registry.npm.taobao.org

ADD ./tsconfig.json /usr/src/tsconfig.json

ADD ./webpack.config.js /usr/src/webpack.config.js

ADD ./optuna_dashboard/static/ /usr/src/optuna_dashboard/static

RUN mkdir -p /usr/src/optuna_dashboard/public

RUN npm run build:prd

FROM python:3.8-buster AS python-builder

WORKDIR /usr/src

RUN pip install --upgrade pip setuptools -i https://pypi.tuna.tsinghua.edu.cn/simple

RUN pip install --progress-bar off PyMySQL cryptography psycopg2-binary -i https://pypi.tuna.tsinghua.edu.cn/simple

ADD ./setup.cfg /usr/src/setup.cfg

ADD ./setup.py /usr/src/setup.py

ADD ./optuna_dashboard /usr/src/optuna_dashboard

COPY --from=front-builder /usr/src/optuna_dashboard/public/ /usr/src/optuna_dashboard/public/

RUN pip install --progress-bar off . -i https://pypi.tuna.tsinghua.edu.cn/simple

FROM python:3.8-slim-buster as runner

COPY --from=python-builder /usr/local/lib/python3.8/site-packages /usr/local/lib/python3.8/site-packages

COPY --from=python-builder /usr/local/bin/optuna-dashboard /usr/local/bin/optuna-dashboard

EXPOSE 8080

ENTRYPOINT ["optuna-dashboard", "--port", "8080", "--host", "0.0.0.0"]

k8s deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: optuna-dashboard

namespace: ai

spec:

selector:

matchLabels:

app: optuna-dashboard

replicas: 1 # tells deployment to run 2 pods matching the template

template:

metadata:

labels:

app: optuna-dashboard

spec:

containers:

- name: optuna-dashboard

image: 192.168.0.93/ai/optuna-dashboard:v1

ports:

- containerPort: 8080

command: ["optuna-dashboard"]

args: ["mysql+pymysql://root:password@192.168.0.1:3306/optuna", "--port", "8080", "--host", "0.0.0.0"]

---

apiVersion: v1

kind: Service

metadata:

name: optuna-dashboard

namespace: ai

spec:

ports:

- port: 80

targetPort: 8080

protocol: TCP

name: http

selector:

app: optuna-dashboard

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: optuna-dashboard

namespace: ai

annotations:

kubernetes.io/ingress.class: traefik

spec:

rules:

- host: optuna.platform.dilu.com

http:

paths:

- path: /

pathType: ImplementationSpecific

backend:

service:

name: optuna-dashboard

port:

name: http

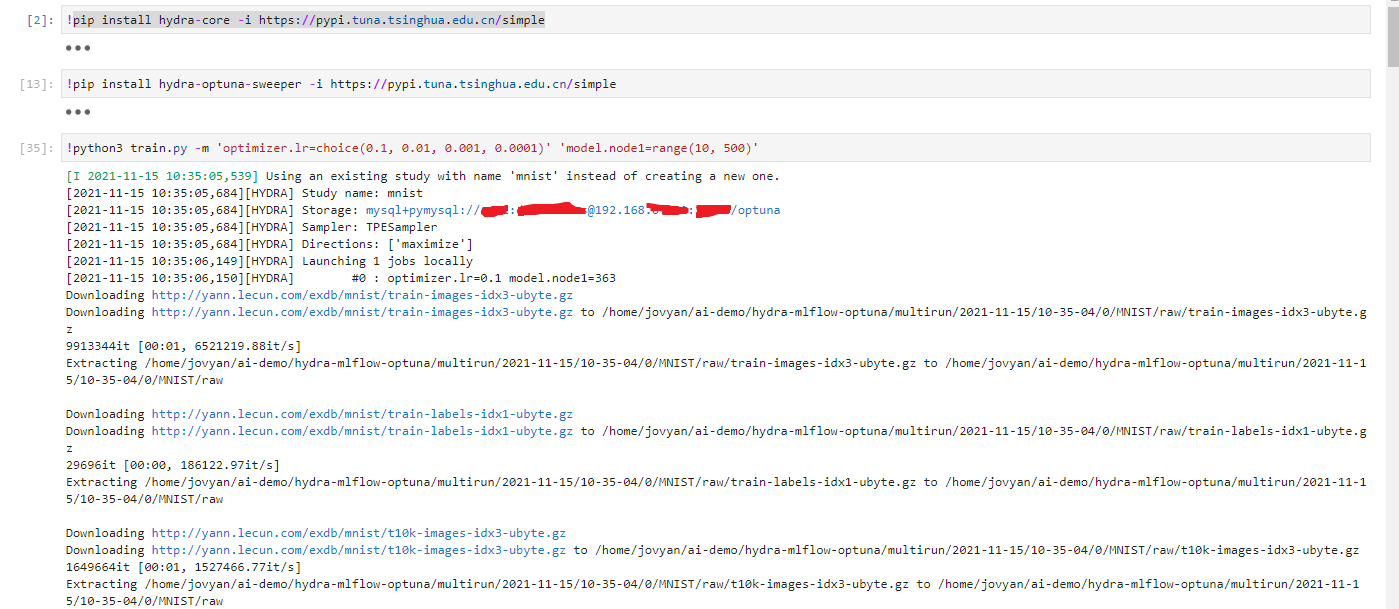

代码示例

model.py

import os

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader

from torchvision import transforms

class SAMPLE_DNN(nn.Module):

def __init__(self, cfg):

super(SAMPLE_DNN, self).__init__()

self.model = nn.Sequential(nn.Linear(28 * 28, cfg.model.node1),

nn.ReLU(),

nn.Linear(cfg.model.node1, cfg.model.node2),

nn.ReLU(),

nn.Linear(cfg.model.node2, 10))

def forward(self, x):

return self.model(x.view(x.size(0), -1))

train.py

import os

import sys

from omegaconf import DictConfig, ListConfig

import hydra

from hydra import utils

import mlflow

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import transforms

from torchvision.datasets import MNIST

from torch.utils.data import DataLoader, random_split

from model import SAMPLE_DNN

os.environ["AWS_ACCESS_KEY_ID"] = "mlflow"

os.environ["AWS_SECRET_ACCESS_KEY"] = "mlflow"

os.environ["MLFLOW_S3_ENDPOINT_URL"] = f"http://s3.platform.dilu.com/"

#os.environ["HYDRA_FULL_ERROR"]="1"

#os.environ["MLFLOW_TRACKING_INSECURE_TLS"] = "true"

#os.environ["MLFLOW_TRACKING_URI"] = f"http://mlflow.platform.dilu.com/"

#mlflow.set_experiment(experiment_name='mlflow-optuna-pytorch')

def log_params_from_omegaconf_dict(params):

for param_name, element in params.items():

_explore_recursive(param_name, element)

def _explore_recursive(parent_name, element):

if isinstance(element, DictConfig):

for k, v in element.items():

if isinstance(v, DictConfig) or isinstance(v, ListConfig):

_explore_recursive(f'{parent_name}.{k}', v)

else:

mlflow.log_param(f'{parent_name}.{k}', v)

elif isinstance(element, ListConfig):

for i, v in enumerate(element):

mlflow.log_param(f'{parent_name}.{i}', v)

@hydra.main(config_path='conf', config_name='config')

def main(cfg):

dataset = MNIST(os.getcwd(), download=True, transform=transforms.ToTensor())

train, val = random_split(dataset, [55000, 5000])

trainloader = DataLoader(train, batch_size=cfg.train.batch_size, shuffle=True)

testloader = DataLoader(val, batch_size=cfg.test.batch_size, shuffle=False)

model = SAMPLE_DNN(cfg)

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=cfg.optimizer.lr,

momentum=cfg.optimizer.momentum)

mlflow.set_tracking_uri(f'http://mlflow.platform.dilu.com/')

mlflow.set_experiment(cfg.mlflow.runname)

with mlflow.start_run():

for epoch in range(cfg.train.epoch):

running_loss = 0.0

log_params_from_omegaconf_dict(cfg)

for i, (x, y) in enumerate(trainloader):

steps = epoch * len(trainloader) + i

optimizer.zero_grad()

outputs = model(x)

loss = criterion(outputs, y)

loss.backward()

optimizer.step()

running_loss += loss.item()

mlflow.log_metric("loss", loss.item(), step=steps)

correct = 0

total = 0

with torch.no_grad():

for (x, y) in testloader:

outputs = model(x)

_, predicted = torch.max(outputs.data, 1)

total += y.size(0)

correct += (predicted == y).sum().item()

accuracy = float(correct / total)

mlflow.log_metric("acc", accuracy, step=epoch)

return accuracy

if __name__ == '__main__':

main()

config.yaml

# config.yaml

model:

node1: 128

node2: 64

optimizer:

lr: 0.001

momentum: 0.9

train:

epoch: 1

batch_size: 64

test:

batch_size: 64

mlflow:

runname: "hydra-mlflow-optuna"

defaults:

- override hydra/sweeper: optuna

- override hydra/sweeper/sampler: tpe

hydra:

sweeper:

direction: maximize

study_name: mnist

storage: "mysql+pymysql://root:password@mysqlhost:3306/optuna"

n_trials: 20

n_jobs: 1

sampler:

seed: 123